A machine learning model is deployed once it is ready to start serving prediction requests from users. To ensure safety and scalability, deployment needs to be as seamless as possible. Because Vertex AI is a fully managed service, it has a low total cost of ownership, and its seamless auto-scaling avoids over-provisioning hardware.

With Prediction Service and a variety of VM and GPU types, developers can choose the most cost-effective hardware for a given model. Unlike open-source serving stacks, it also includes several proprietary backend optimizations that further reduce costs. It integrates with Stackdriver for out-of-the-box logging, and it provides prebuilt components for request-response logging in BigQuery and for regularly deploying models from pipelines.
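
As a rough illustration of how hardware choice and auto-scaling bounds come together at deployment time, the sketch below collects them in one place and passes them to `Model.deploy` from the Vertex AI SDK for Python. `ServingConfig` and `deploy_model` are hypothetical helper names introduced here, and the machine type and replica counts are illustrative values rather than recommendations. The deploy call itself assumes the `google-cloud-aiplatform` package, valid credentials, and an existing model resource.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class ServingConfig:
    """Hardware and auto-scaling settings for one deployed model (hypothetical helper)."""

    machine_type: str = "n1-standard-4"      # illustrative VM type
    min_replica_count: int = 1               # lower auto-scaling bound
    max_replica_count: int = 3               # upper auto-scaling bound
    accelerator_type: Optional[str] = None   # e.g. "NVIDIA_TESLA_T4"
    accelerator_count: Optional[int] = None

    def to_deploy_kwargs(self) -> dict:
        """Validate the settings and return keyword arguments for Model.deploy."""
        if self.min_replica_count < 1:
            raise ValueError("min_replica_count must be at least 1")
        if self.max_replica_count < self.min_replica_count:
            raise ValueError("max_replica_count must be >= min_replica_count")
        if (self.accelerator_type is None) != (self.accelerator_count is None):
            raise ValueError("set accelerator_type and accelerator_count together")
        # Drop unset accelerator fields so the SDK defaults apply.
        return {k: v for k, v in asdict(self).items() if v is not None}


def deploy_model(model_name: str, config: ServingConfig, project: str, location: str):
    """Deploy an existing model resource to a new endpoint (assumes SDK and credentials)."""
    # Imported lazily so the config helper above works without the SDK installed.
    from google.cloud import aiplatform

    aiplatform.init(project=project, location=location)
    model = aiplatform.Model(model_name)
    return model.deploy(**config.to_deploy_kwargs())
```

Keeping the replica bounds explicit makes the cost trade-off visible: the service scales between the two values instead of holding peak capacity permanently.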

Built-in compliance and security: Users can deploy models inside their own secure perimeter. Your endpoints can be accessed through GCP's PSA (private services access) integration, and your data is always secure.

Now that we have a good theoretical understanding of Vertex AI and its key features, let's see how Vertex AI looks on the platform and then work with AutoML.

Follow the steps below to open Vertex AI on the platform:

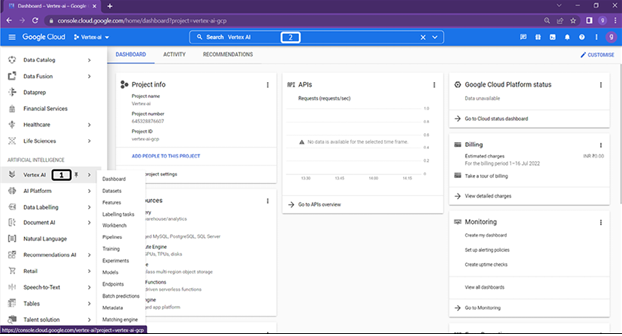

Step 1:

- Vertex AI can be found under Artificial Intelligence; select it to open the Vertex AI dashboard.

- Alternatively, users can type Vertex AI in the search box, as seen in Figure 2.1:

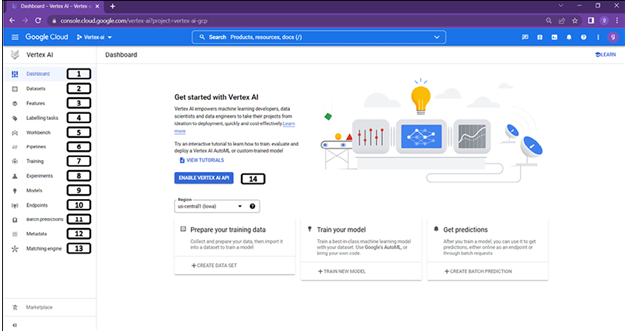

Step 2: Landing page of Vertex AI

The components on the landing page of Vertex AI can be seen in Figure 2.2:

Figure 2.2: Landing page of Vertex AI

Brief descriptions of the components on the landing page of Vertex AI are listed below:

- Vertex AI dashboard: Landing page of Vertex AI.

- Datasets: Datasets may be either structured or unstructured. They carry managed metadata, such as annotations (at present, only Cloud Storage and BigQuery are supported).

- Feature Store: The feature store is a centralized repository for organizing, storing, and serving ML features. This resolves the issues of redundant feature development and underuse of high-quality features. A centralized repository makes it clear who is responsible for creating, storing, and consuming features. Feature stores support high-throughput batch requests and low-latency online requests.

- Labels: Labeling the data is typically the first phase in the machine learning development cycle. Human labelers can assist in completing this labeling task.

- Workbench: Vertex AI Workbench is a single development environment for the whole data science workflow. It offers managed notebooks (a Google-managed environment, for easier operations) and user-managed notebooks (for users who need complete control over their environment).

- Pipelines: Vertex AI Pipelines enables you to automate, monitor, and govern your ML systems by orchestrating your machine learning workflow in a serverless fashion and storing your workflow's artifacts using Vertex ML Metadata.

- Training: Training pipelines are the primary way to train models in Vertex AI.

- Experiments: When creating a model for a problem, it is important to determine which model is suitable for that specific use case. Vertex AI Experiments lets you track, analyze, compare, and search across several ML frameworks and training settings, including TensorFlow, PyTorch, and scikit-learn.

- Models: Models are either imported directly or created through a training pipeline. A model, as a machine learning solution, serves as a container for versions, which are the actual implementations of the model.

- Endpoints: Predictions are served by deploying the trained model to an endpoint. An endpoint may contain one or more models and versions of those models, with disambiguation based on the request.

- Batch predictions: For large datasets that would take too long to process using online prediction, Vertex AI Batch Prediction is an alternative. It offers an efficient, serverless, scalable solution that returns results asynchronously. Users don't need to deploy the model to an endpoint in order to get batch predictions from the model resource.

- Metadata: Vertex ML Metadata lets you record the metadata and artifacts created by your ML system. You can then query that metadata to help assess, debug, and audit the performance of the system and the artifacts it generates.

- Matching engine: Matching Engine offers a vector-similarity matching service (also known as approximate nearest neighbor search), which Google positions as market-leading, as well as techniques to train semantic embeddings for similarity-matching use cases.

- To continue working, enable the Vertex AI APIs.
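
To make the difference between the Endpoints and Batch predictions entries above more concrete, here is a minimal sketch using the Vertex AI SDK for Python. It assumes a custom-trained model, the `google-cloud-aiplatform` package, valid credentials, and JSON Lines input for the batch job. The function names, the job display name, and the machine type are illustrative choices, not values from this book.

```python
import json
from typing import Any, Iterable, List


def to_jsonl(instances: Iterable[dict]) -> str:
    """Serialize prediction instances as JSON Lines, one instance per line."""
    return "".join(json.dumps(inst, sort_keys=True) + "\n" for inst in instances)


def online_predict(endpoint_name: str, instances: List[Any]) -> List[Any]:
    """Synchronous request against a model that is already deployed to an endpoint."""
    # Imported lazily so to_jsonl can be used without the SDK installed.
    from google.cloud import aiplatform

    endpoint = aiplatform.Endpoint(endpoint_name)
    return endpoint.predict(instances=instances).predictions


def batch_predict(model_name: str, gcs_source: str, gcs_destination_prefix: str):
    """Asynchronous job started from the model resource; no endpoint is involved."""
    from google.cloud import aiplatform

    model = aiplatform.Model(model_name)
    return model.batch_predict(
        job_display_name="example-batch-job",  # hypothetical name
        gcs_source=gcs_source,                  # e.g. a JSONL file in Cloud Storage
        gcs_destination_prefix=gcs_destination_prefix,
        instances_format="jsonl",
        predictions_format="jsonl",
        machine_type="n1-standard-4",           # illustrative VM type
        sync=False,                             # return without waiting for completion
    )
```

The output of `to_jsonl` is the kind of file that would be uploaded to Cloud Storage and passed as `gcs_source`, while `online_predict` sends the same instances directly in the request.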

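The vector-similarity matching mentioned under Matching engine can be illustrated with a small exact search in plain Python. This is only a conceptual sketch: it compares the query against every stored vector, whereas an approximate nearest neighbor service trades some exactness for speed at scale. The function names and the toy embeddings are made up for illustration and do not reflect the Matching Engine API.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest_neighbors(
    query: Sequence[float],
    index: Dict[str, Sequence[float]],
    k: int = 2,
) -> List[Tuple[str, float]]:
    """Return the k items most similar to the query, by exhaustive comparison."""
    scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy two-dimensional "embeddings" (made-up values).
index = {"cat": [1.0, 0.0], "kitten": [0.9, 0.1], "car": [0.0, 1.0]}
top = nearest_neighbors([1.0, 0.05], index, k=2)
print([item_id for item_id, _ in top])
```

With these toy vectors, the two animal entries rank above `car` because their directions are closest to the query.
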
This book covers the various components of Vertex AI with practical examples. We will start with AutoML in Vertex AI.