Model evaluation is the process of measuring the quality of a model’s predictions. To do this, AutoML evaluates the performance of the trained model on a test split of the dataset: 10% of the training data is held out for evaluation (refer to Figure 2.13).
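As a rough illustration of what evaluating on a held-out test split means, the sketch below shuffles a toy dataset, sets 10% of the rows aside, and scores a stand-in model on those rows only. The helper names `holdout_split` and `evaluate`, the toy rows, and the `predict` function are assumptions made for this example. AutoML performs its own split internally, and this code does not reproduce it.

```python
import random


def holdout_split(rows, test_fraction=0.10, seed=42):
    """Shuffle rows and hold out `test_fraction` of them as a test split."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)  # copy so the caller's data is left untouched
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    # First n_test shuffled rows become the test split, the rest remain for training.
    return shuffled[n_test:], shuffled[:n_test]


def evaluate(predict, test_rows):
    """Fraction of held-out rows whose label is predicted correctly (accuracy)."""
    correct = sum(1 for features, label in test_rows if predict(features) == label)
    return correct / len(test_rows)


# Toy data (hypothetical): the feature is an integer, the label is its parity.
rows = [(i, i % 2) for i in range(100)]
train_rows, test_rows = holdout_split(rows)

# Stand-in for a trained model; a real model would be fitted on train_rows.
def predict(features):
    return features % 2

print(len(train_rows), len(test_rows), evaluate(predict, test_rows))
```

The important point is that the rows used for scoring are never seen during training, so the reported metric reflects performance on unseen data.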

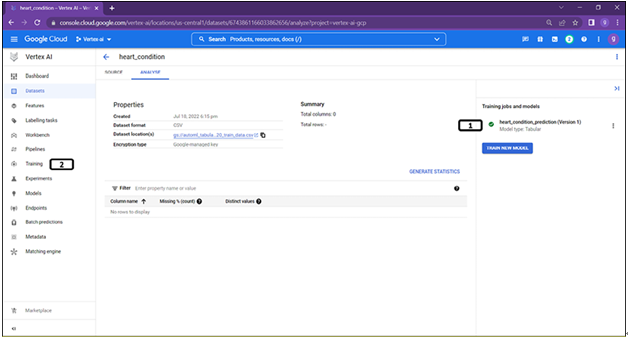

Step 1: Checking model training

The status of the training can be seen in Figure 2.17:

Figure 2.17: Training complete

- Once the model training is completed, it is marked with a green tick.

- The training job is also listed in the training section of Vertex AI (click on it).

Step 2: Model training results

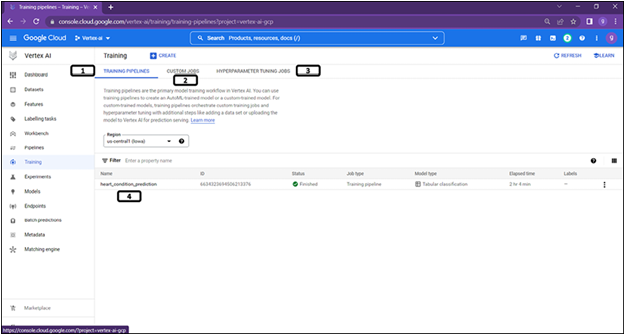

Training jobs are listed under the training section, as shown in Figure 2.18:

Figure 2.18: Training job listed under training section

- Training pipelines lists the training jobs of AutoML models.

- Custom jobs lists the training jobs of custom models.

- Hyperparameter tuning jobs lists the training jobs of custom models with hyperparameter tuning.

- The AutoML model trained in the previous steps appears here (click on it). The trained model is also listed under the model section of Vertex AI.

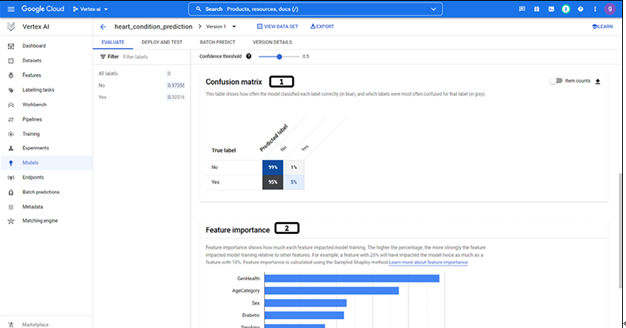

Step 3: Model evaluation

Clicking on the model navigates to the model section, as shown in Figure 2.19:

Figure 2.19: Trained model evaluation

- A newly trained model is stored as the first version of the model. When multiple versions have been trained, this option selects a specific version of the model.

- The trained model can be exported as a TensorFlow package to Cloud Storage.

- Evaluate lists the classes of the target variable. Users can select a specific class to check its evaluation metrics separately.

- Different evaluation metrics are listed, along with visualizations of a few of them. The confidence threshold can be varied to inspect the graphs at different levels of confidence.

- Graphical representation of the evaluation metrics (varies with the confidence threshold).

- Batch predict provides options for batch prediction.

- Deploy and test provides options for online prediction.

- Version details provides details of the model version (click on Version details).
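To make the effect of the confidence threshold concrete, here is a minimal sketch that computes precision and recall for one selected class at several thresholds. The function name `metrics_at_threshold`, the class names, and the scores are made up for illustration and are not taken from the console or from any Vertex AI API. Returning 0.0 when a denominator is zero is a convention assumed here.

```python
def metrics_at_threshold(scores, labels, target_class, threshold):
    """Precision and recall for `target_class` at a confidence threshold.

    scores: list of dicts mapping class name -> predicted confidence.
    labels: list of true class names, in the same order as `scores`.
    """
    tp = fp = fn = 0
    for row_scores, true_label in zip(scores, labels):
        # A row counts as predicted positive only if the confidence for the
        # selected class reaches the threshold.
        predicted_positive = row_scores.get(target_class, 0.0) >= threshold
        actual_positive = true_label == target_class
        if predicted_positive and actual_positive:
            tp += 1
        elif predicted_positive:
            fp += 1
        elif actual_positive:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0  # assumed convention: 0.0 if undefined
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical predictions for a two-class target variable.
scores = [
    {"yes": 0.9, "no": 0.1},
    {"yes": 0.6, "no": 0.4},
    {"yes": 0.4, "no": 0.6},
    {"yes": 0.2, "no": 0.8},
]
labels = ["yes", "no", "yes", "no"]

for threshold in (0.3, 0.5, 0.8):
    precision, recall = metrics_at_threshold(scores, labels, "yes", threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

Raising the threshold generally trades recall for precision, which is why the graphs in the console change as the threshold is moved.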

Step 4: Confusion matrix and feature importance

Scrolling down shows information about other metrics, as shown in Figure 2.20:

Figure 2.20: Trained model evaluation

- Confusion matrix: click on the item counts to display the matrix with actual counts.

- Variable importance information (variable importance is calculated using the sampled Shapley method).
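The two items above can be sketched in plain Python. `confusion_matrix` returns both the raw counts and row-wise percentages, and `sampled_shapley` estimates per-feature attributions by averaging marginal contributions over randomly sampled feature orderings. This is a generic illustration of the permutation-sampling idea, not Vertex AI's implementation; the function names, the `predict` callable, the baseline input, and the sample count are all assumptions.

```python
import random
from collections import Counter


def confusion_matrix(y_true, y_pred, classes):
    """Return (counts, percentages); rows are true classes, columns predicted."""
    pair_counts = Counter(zip(y_true, y_pred))
    counts = [[pair_counts[(t, p)] for p in classes] for t in classes]
    percentages = []
    for row in counts:
        total = sum(row)
        percentages.append([100.0 * c / total if total else 0.0 for c in row])
    return counts, percentages


def sampled_shapley(predict, instance, baseline, num_samples=200, seed=0):
    """Estimate feature attributions from randomly sampled feature orderings."""
    rng = random.Random(seed)
    n = len(instance)
    attributions = [0.0] * n
    for _ in range(num_samples):
        order = list(range(n))
        rng.shuffle(order)
        current = list(baseline)  # start from the baseline input
        previous_output = predict(current)
        for i in order:
            current[i] = instance[i]  # switch feature i to its actual value
            output = predict(current)
            attributions[i] += output - previous_output  # marginal contribution
            previous_output = output
    return [a / num_samples for a in attributions]


# Hypothetical labels and predictions for a two-class problem.
counts, percentages = confusion_matrix(
    ["a", "a", "b", "b"], ["a", "b", "b", "b"], classes=["a", "b"]
)
print(counts, percentages)

# Hypothetical model: a fixed linear function of two features.
def predict(x):
    return 2.0 * x[0] + 3.0 * x[1]

print(sampled_shapley(predict, instance=[1.0, 1.0], baseline=[0.0, 0.0]))
```

For a linear model such as the one above, each feature's marginal contribution is the same in every ordering, so the estimate matches the model coefficients times the change in each feature. For models with feature interactions, more samples give a closer estimate.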

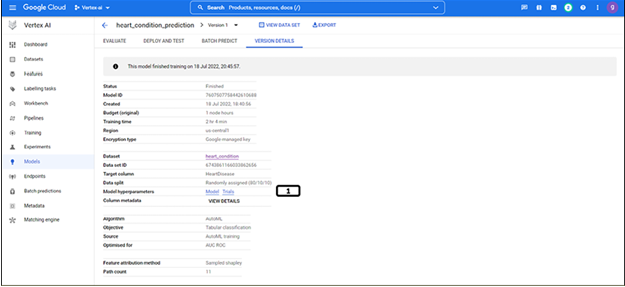

Step 5: Model version details

More details of the model are displayed, as shown in Figure 2.21:

Figure 2.21: Trained model details

- Various details of the model are listed.

- Detailed log messages are available in the models and trials.